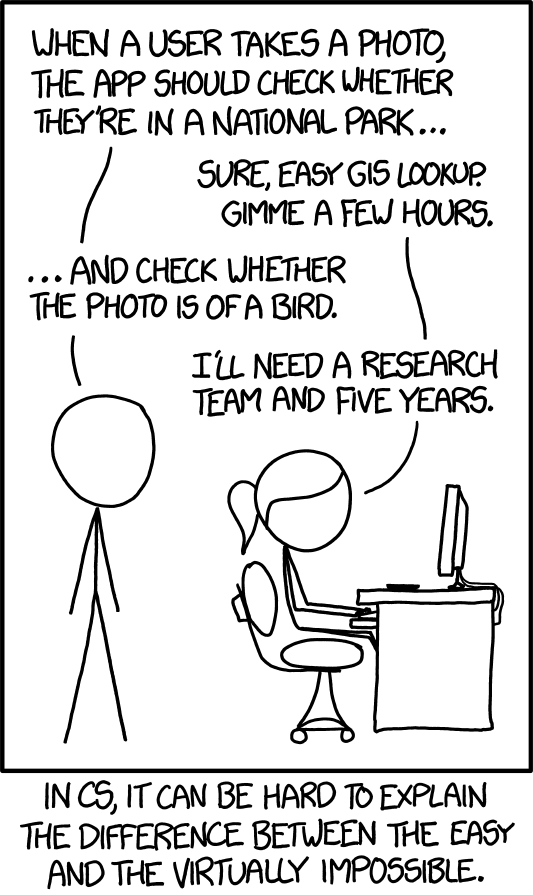

We, as humans, can accurately and precisely tell whether a picture depicts a bird or not by just having a quick glimpse at it. Computers, on the other hand, can hardly see anything meaningful in the collection of 1’s and 0’s that make a virtual image. Not long ago, these kind of visual recognition tasks that humans naturally excel at were extremely difficult for computers to even attempt to do.

xkcd.com/1425 (CC BY-NC 2.5)

In 2012, a group of scientists from the University of Toronto had a break-through. They built a type of neural network designed to classify pictures amongst 1,000 different classes, birds included, surpassing by a long-shot the results achieved by previous methods. This research paved the way to more complex architectures from Google, Oxford, Microsoft and many others that would become the basis for entirely new applications.

Data-hungry algorithms

Deep Convolutional Neural Networks (DCNN) are neural networks taken to the extreme. Having dozens to hundreds of layers, each with thousands of neurons, they are the key ingredient to the success in many recent classification and segmentation challenges.

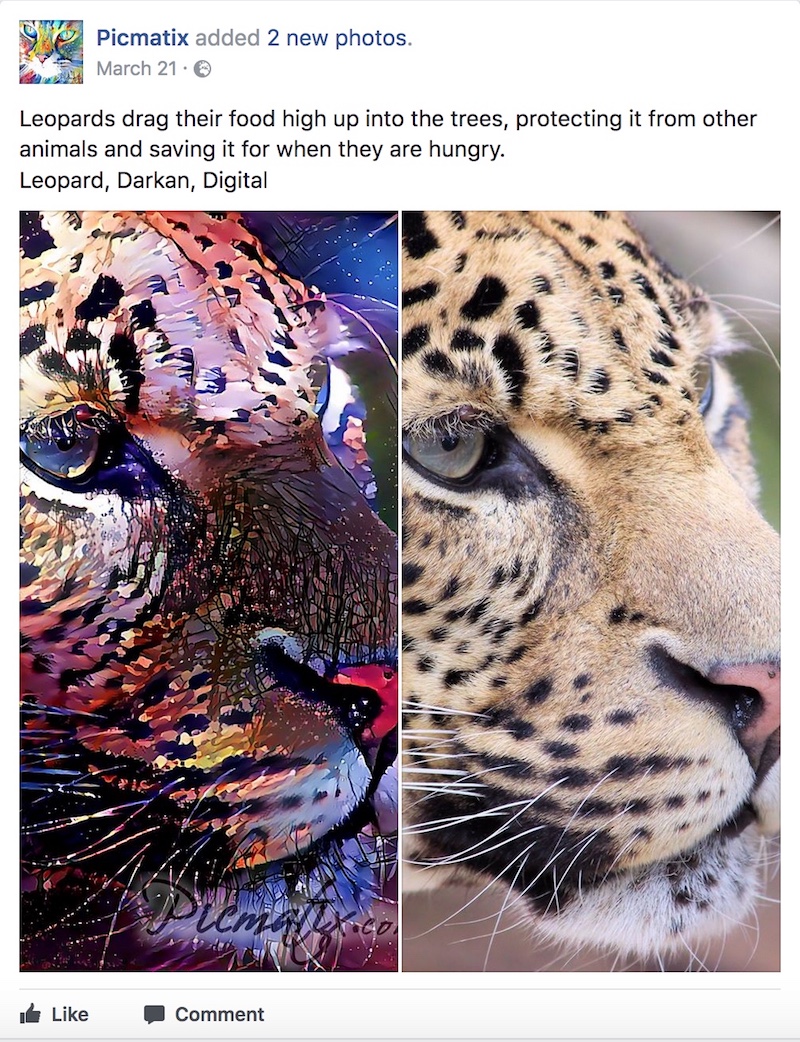

These deep neural networks can be difficult and slow to train from scratch and require enormous amounts of data (ImageNet has about 1.2 million human-labeled images for the Object localization challenge). For this reason, researchers have developed techniques to leverage trained networks that classify trucks, planes, dogs, and cats, for them to be used in entirely different applications such as detecting eye diseases or generating art. This is called Transfer Learning.

With this method, some Computer Vision models can be finetuned with as little as 20K manually labeled images to distinguish dogs from cats with 99% accuracy. One could argue this is still a lot of data, but it is almost nothing when compared to the more than 1 million manually labeled images required to train a neural network from scratch.

Left: computer generated artwork of a Leopard. Right: Original picture. Source: [picmatix.com]

Looking ahead

While it would seem we are reaching a plateau in terms of designing more accurate classification models. Other research directions are looking very promising:

-

On the one hand, reducing the need for human-annotated datasets with new architectures that can make accurate predictions with just a few examples (one-shot learning) has emerged as a very promising research direction.

-

On the other hand, Generative Models, neural networks capable of creating content that looks authentic to the human eye, have the potential to create entirely new lines of businesses. Don’t be surprised if your next favourite movie characters are imagined and created entirely by neural networks:

A Generative Adversarial Network (BEGAN) created these almost real-looking faces out of thin air. Source: https://arxiv.org/abs/1703.10717

While these are, by far, not the only interesting research directions. One thing is certain: we will see more and more neural networks embedded in our daily life. Neural networks are here to stay.